
Collaborative Virtual Reality


ATS has been working with Iowa State University’s Virtual Reality Applications Center to develop innovative techniques and build the infrastructure needed to share virtual reality models across the Internet.

This technique, called Collaborative VR, was added to the vrNav software, which is normally used to fly through virtual world models in the Portal. Collaborative VR was demonstrated in April between the Portal and Iowa State’s Human Computer Interaction Center.

Here’s how it works. With Collaborative VR built into vrNav, vrNav is started on the same model at two different facilities. As the people at one location fly through the model, their copy of vrNav controls the copy at the other facility so that it flies identically. The audiences at both locations see the same view of the model simultaneously.
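The leader/follower behavior described above can be illustrated with a small state-mirroring sketch: the site that is flying serializes its viewpoint every frame, and the other site applies each update it receives, ignoring any that arrive out of order. This is a hypothetical illustration, not vrNav's actual protocol; the `CameraPose` fields, the JSON message format, and the frame-counter check are all assumptions, and the network transport (for example a UDP or TCP socket) is left out.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class CameraPose:
    """Hypothetical viewpoint state: position in model units, orientation in degrees."""
    x: float
    y: float
    z: float
    heading: float
    pitch: float


def encode_pose(frame: int, pose: CameraPose) -> bytes:
    """Leader side: serialize one frame's viewpoint (assumed JSON wire format)."""
    return json.dumps({"frame": frame, "pose": asdict(pose)}).encode("utf-8")


class Follower:
    """Follower side: mirrors the leader's viewpoint so both screens show the same view."""

    def __init__(self) -> None:
        self.pose = CameraPose(0.0, 0.0, 0.0, 0.0, 0.0)
        self.last_frame = -1

    def receive(self, message: bytes) -> bool:
        """Apply an update; return False if it is older than the pose already shown."""
        data = json.loads(message.decode("utf-8"))
        if data["frame"] <= self.last_frame:
            return False  # stale or duplicate update, e.g. reordered in transit
        self.last_frame = data["frame"]
        self.pose = CameraPose(**data["pose"])
        return True


if __name__ == "__main__":
    follower = Follower()
    # Simulate the leader moving forward along the z axis for three frames.
    for frame in range(3):
        leader_pose = CameraPose(0.0, 1.7, -float(frame), 0.0, 0.0)
        follower.receive(encode_pose(frame, leader_pose))
    print(follower.pose)
```

Sending the whole pose each frame, rather than incremental moves, means a lost or late update is simply superseded by the next one, so the two views cannot drift apart over time.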

At the same time that people at UCLA were modifying vrNav, researchers at Iowa State’s VRAC were modifying vrJuggler for collaborative use. One of their innovations was to add an avatar to the scene. The avatar, which marks the location of the viewer in the model, is controlled by one side and moves identically through the models at both locations.

Research Scholar Chris Johanson and Visualization Portal Development Coordinator Joan Slottow led the UCLA team that built rudimentary Collaborative VR into vrNav.

Learn more about vrNav at /at/vrNav/default.htm.

For the April demonstration, Professor John Dagenais of the UCLA Department of Spanish and Portuguese was in the UCLA Portal as he gave a virtual tour of the Santiago de Compostela model to the audience at Iowa State. A second successful demonstration of Collaborative VR was made the following day between UCLA and the University of Mexico.

The long-term benefit of Collaborative VR is that it will allow an expert in one geographic location to fly through computer-simulated models in real time at two locations while delivering lectures to a remote audience.


Future Scholars


Roosevelt School third graders take a turn at the Visualization Portal steering wheel to fly through a virtual reality model of UCLA. Virtual UCLA was created by the Urban Simulation Team. More than 1,800 students representing 50 K-12 schools have visited the Portal since it opened in 2001.


Computation-based Research at ATS


Academic Technology Services has implemented a program for hosting computational clusters in the newly renovated research data center. The core idea is to preserve researchers’ ownership of, and on-demand access to, their resources while providing data center space and, in some cases, cluster administration.

ATS will offer tiered levels of service. Tier 1 provides space, environmental controls, and security for researchers who plan to administer their own cluster. Tier 2 continues ATS’s existing cluster consulting program for researchers who want to locate their cluster in their own space but need support in configuring it. Tier 3 (full service) provides Tier 1 service levels plus ATS system administration, storage, and archival services.

ATS has established a long-range goal of eventually supporting a total of 1,200 nodes and is currently identifying the required infrastructure to support such a resource.

ATS is already involved in two cluster-hosting arrangements.

Plasma Physics Cluster: Academic Technology Services has submitted an RFP on behalf of the Plasma Physics group for a 256-node, 512-processor computer cluster to advance research and education in broad and diverse areas of plasma science. Vendor selection is expected to conclude this month. The cluster will be built using a $1 million National Science Foundation Major Research Instrumentation (MRI) grant to UCLA Physics faculty and will be housed in the UCLA research data center.

California NanoSystems Institute (CNSI) Cluster: The CNSI Computational Committee is in the process of upgrading its HP cluster. ATS, through the Technology Sandbox, will benchmark three HP products: a new dual-Xeon node, the fastest Itanium 2 node, and a mid-range Itanium 2 node. ATS will also investigate a new switch that will allow CNSI to expand to a total of 50 to 60 nodes, and plans to add an HP backup system as part of this upgrade.

For information on cluster hosting at Academic Technology Services, contact Bill Labate at labate@ats.ucla.edu or 310-206-7323.


Student Advances to Professor Faster Using Portal Resources


Abdul-muttaleb al-Ballam earned his Ph.D. in architecture and is embarking on a career as a university professor a little more quickly than he might have, thanks at least in part to UCLA’s Visualization Portal and Modeling Lab. Al-Ballam is one of six people who have used the Visualization Portal to work on or defend their dissertations.

“With the facilities at ATS, I was able to finish my dissertation in one year,” al-Ballam said. “This would have taken me three years in a different place.”

Al-Ballam, who came from Kuwait to study at UCLA, had been doing his computer-aided design work at another digital facility on campus when a fellow student introduced him to the high-speed world of ATS.

“The Modeling and Visualization Lab is what impressed me, because they had many types of software that I needed in order to accomplish my dissertation,” al-Ballam said. “I could build my 3-D urban models in Creator and transform them into VRML code. That’s a 3-D representation language for the Web. From there, I could take the code and manually change it and enhance it.”

“With the availability of fast PCs equipped with very fast video cards, which enable you to run the real-time animation, I was able to shorten the project time,” al-Ballam said.

For his doctoral dissertation, al-Ballam developed a digital teaching tool that he hopes will help college architecture students better understand how an urban environment evolves through the ages. It focuses on the Lebanese city of Baalbek and was designed to be viewed in real time over the Internet. It allows students to “fly” through the streets of the ancient city, studying the influence of the numerous cultures that settled there over a span of 13 centuries. “It gives a good idea of how certain urban evolution has happened,” al-Ballam said. “As a student moves through the model, he will witness the buildings change with time. It’s a virtual time machine.”

Al-Ballam, who holds a master’s degree and bachelor’s degree in architecture, explained the importance of understanding how different cultural influences on urban development are related. “When a historian interprets history without an overall view of the connection between cultures, then he has a problem. He doesn’t see how the city evolved continuously. With my tool, he’ll be able to see this continuous evolution and he’ll have less of a chance to be biased against certain cultures.”

As his model was developing, al-Ballam would invite his professors and advisers to the Portal for viewing and critique sessions. He also used the Portal for the successful defense of his dissertation before his doctoral committee. “The setting of the Portal is very professional,” he said. “It has elevated my project. There is no other place on campus where you could find a wide-screen projector. Seeing my project there helped my committee ‘live’ the model.”

With his new Ph.D. in hand, al-Ballam is returning to his homeland, where a teaching position awaits him at Kuwait University. He said his time in the Modeling and Visualization Lab and in the Visualization Portal has inspired him to work to reproduce the same kind of facilities at home.


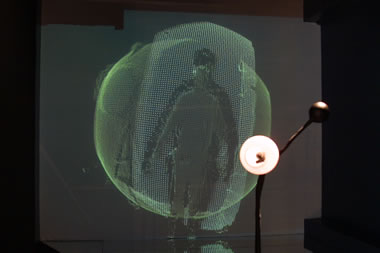

Dancing in Digital Space


Dancer Norah Zuniga Shaw, Department of World Arts and Cultures, used the Visualization Portal to teach an undergraduate course that examined the relationship between emerging technology and the arts. Shaw and her students linked up with their counterparts at the University of California, Riverside to develop movement and media improvisations that bring together live dancing bodies, virtual reality models, streaming media and OpenMash videoconferencing technologies.

Read the course proposal.

See more photos.

For more information, see www.zunigashaw.com.


In Victoria Vesna's world, science is artistic and art is scientific. Vesna is a media artist, and she defines her art as "experimental research." A professor at UCLA's School of the Arts and Architecture, she is chair of the school's Department of Design | Media Arts and is a renowned master of her genre. A media artist, Vesna explains, is "someone who works with technology and collaborates with many different disciplines, looking at contemporary issues that are raised by scientific and technological innovations."

In her field, the computer is not a tool but a medium, like oil paint or a piece of clay. And her creations aren't merely physical objects on display in a gallery or museum; they also exist in the virtual world of the Internet.

"My goal is to show that these worlds have a distinct quality in relation to time and navigation but are not separate, and one is not more important than the other," she said.

Vesna's primary world is art, but in the past decade her interests and curiosities have increasingly crossed into the realms of science and technology.

She says she finds "science labs much more fascinating than artist studios." As a result, her numerous collaborations with scientists should come as no surprise. In particular, she has been teaming up with those working at the atomic and molecular levels in the field known as nanotechnology.

One of her more recent works, "zero@wavefunction: nano dreams & nightmares," was created in collaboration with noted UCLA nanoscientist James Gimzewski as a tribute to her fascination with hexagons and their role in nature. The work incorporates virtual buckyballs, the nickname given to a hollow, sphere-shaped carbon molecule reminiscent of architect R. Buckminster Fuller's geodesic dome.

Zero@wavefunction is meant to simulate, on a monumental scale, the way a nanoscientist manipulates an individual molecule. Software written by then-UCLA Design | Media Arts student Josh Nimoy allows viewers, whether in person in front of a giant screen or via the Internet, to manipulate the buckyballs by activating a series of visualizations, sounds and texts.

The work has become a permanent installation at the Visualization Portal. Academic Technology Services was instrumental in the work's creation. "Without their help, this piece simply would not have been achieved in time to premiere at the Biennial of Electronic Arts in Perth, Australia, in August 2002," Vesna said.

Vesna also worked with ATS staff and Design | Media Arts students when she redesigned the entire California NanoSystems Institute Web site, which, like her artwork, is interactive, allowing viewers to change images as they wish.

Zero@wavefunction is at the core of "nano," a new work commissioned by LACMALab that opened this month at the Los Angeles County Museum of Art and will be on exhibit through September 2004. Once again, Vesna has partnered with nanoscientist Jim Gimzewski to create what she calls a "groundbreaking exhibition that will immerse visitors of all ages in a visceral, multimedia experience of the convergence of computing, nano science and molecular biology."

Vesna says visitors to "nano" interact with multimedia representations of atomic- and molecular-scale structures. They experience the exhibit through their eyes, ears, hands, "even through their feet as they wander over a reactive floor that mimics the structure of graphite," she said.

The UCLA Technology Sandbox, a place where innovation and collaboration are encouraged, provided Vesna and her team with a testing ground for various elements of "nano." "The ATS Sandbox's support has proved to be critical in the production of these new works," Vesna said. "These projects are viewed by the public at large and support the creative work of UCLA artists, scientists and humanists who work collaboratively to promote new ways of thinking and being in the ever more complex world we occupy."


Portal visitors made a virtual pilgrimage to 13th-century Spain to visit Santiago de Compostela this month during a two-day history and virtual reality conference hosted by Professor John Dagenais, Department of Spanish and Portuguese. The highlight of the conference was a concert by the medieval singing group UCLA Sounds, held in the virtual cathedral. This was one of the first truly "mixed" virtual reality performances, in which live performers were placed acoustically in a virtual model of the medieval Cathedral of Santiago de Compostela.

Read the story of Santiago de Compostela.

Read about the innovative sound server that made the concert possible.

Concert in the Cathedral Program


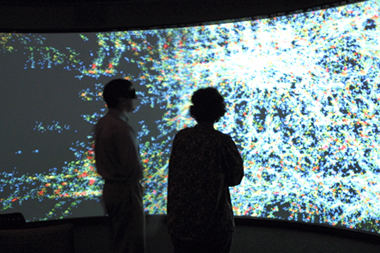

Ecce Homology Exhibit Merges Art and Science

Imagine a work of art that provides an individual viewer the opportunity to become part of a complex science experiment at the same time that it offers beauty and a meditative moment.

Ecce Homology, a 60-foot-long, 12-foot-high interactive art installation that uses a variety of innovative computer technologies, is such a work, and it’s set to open as part of the Fowler Museum’s “From the Verandah” exhibit on Nov. 6.

Several projects at UCLA in the past two years have aimed to tie art and science together in ways that draw in lay audiences and acquaint them with sciences such as genomics, proteomics, and nanotechnology. Ecce Homology, the latest of these art-science blends, is an artistic exploration of the human and rice genomes.

At the Fowler exhibit, visitors will become part of a huge projection in which they can discover evolutionary relationships between human genes and those from a rice seedling. A custom computer vision system will track each visitor’s movements and create light-filled traces in the projection itself. This will allow visitors to interact with luminous pictographic projections, visualizations of actual DNA and protein information, as they stand in front of the projection surface.

Each pictogram represents either a human gene or a gene from the rice genome that is part of the metabolic pathway by which starch is broken down into carbon dioxide. The pictograms are both scientifically accurate visualizations and metaphors for the cycling of energy and the unity of life.

Each viewer, by placing his body in the projection area and moving slowly, performs a scientific experiment that looks for evolutionary relationships between the human and rice genomes. This artistic experiment is the same one that researchers participating in the worldwide genome sequencing projects run through web-based servers and interfaces, using a tool called “BLAST.”

BLAST (Basic Local Alignment Search Tool) is the primary means of accessing what is currently known in genomic biology. Almost every life science research laboratory in the world uses BLAST, making it the most widely used data-mining tool in history. The National Center for Biotechnology Information receives more than 100,000 BLAST searches daily. Despite its ubiquity, for most researchers BLAST is an unseen process.
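The "unseen process" rests on a heuristic: instead of aligning whole sequences, BLAST looks for short exact word matches ("seeds") shared by the query and a database sequence, then extends each seed in both directions for as long as the running score stays close to its best value. The sketch below illustrates only that seed-and-extend idea for DNA with ungapped extension. The word size, match/mismatch scores, and drop-off threshold are illustrative assumptions; real BLAST adds scoring matrices, gapped extension, and statistical significance (E-values), so this is not NCBI's implementation.

```python
from collections import defaultdict


def find_seeds(query: str, subject: str, k: int):
    """Return (query_pos, subject_pos) pairs where a length-k word matches exactly."""
    index = defaultdict(list)
    for i in range(len(query) - k + 1):
        index[query[i:i + k]].append(i)
    seeds = []
    for j in range(len(subject) - k + 1):
        for i in index.get(subject[j:j + k], []):
            seeds.append((i, j))
    return seeds


def extend_seed(query, subject, qi, sj, k, match=1, mismatch=-2, x_drop=3):
    """Ungapped X-drop extension of one seed.

    Returns (score, query_start, query_end, subject_start). Extension in each
    direction stops once the running score falls more than x_drop below the
    best score seen so far; the best-scoring stopping point is kept.
    """
    seed_score = k * match
    # Extend to the right of the seed.
    best_right, right_len, score = 0, 0, 0
    q, s = qi + k, sj + k
    while q < len(query) and s < len(subject):
        score += match if query[q] == subject[s] else mismatch
        if score > best_right:
            best_right, right_len = score, q - (qi + k) + 1
        elif best_right - score > x_drop:
            break
        q += 1
        s += 1
    # Extend to the left of the seed in the same way.
    best_left, left_len, score = 0, 0, 0
    q, s = qi - 1, sj - 1
    while q >= 0 and s >= 0:
        score += match if query[q] == subject[s] else mismatch
        if score > best_left:
            best_left, left_len = score, qi - q
        elif best_left - score > x_drop:
            break
        q -= 1
        s -= 1
    total = seed_score + best_right + best_left
    return total, qi - left_len, qi + k + right_len, sj - left_len


def best_hit(query: str, subject: str, k: int = 4):
    """Highest-scoring ungapped local hit between two sequences, or None."""
    hits = [extend_seed(query, subject, i, j, k) for i, j in find_seeds(query, subject, k)]
    return max(hits, default=None)


if __name__ == "__main__":
    print(best_hit("ACGTACGTTT", "GGACGTACGTGG"))
```

For these two toy sequences, `best_hit` returns `(8, 0, 8, 2)`: an eight-base match that starts at query position 0 and subject position 2. Only word hits are ever extended, which is why this style of search is fast enough to run against whole genomes.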

Ecce Homology is an artistic representation of the BLAST process. While participating in the art installation, each visitor initiates the BLAST operation to generate automated comparisons of the human and rice genomes, which are shown through changes in the pictograms.

Ecce Homology was created by in silico v1.0, a group of UCLA artists and researchers whose work bridges art and science through the use of dynamic media. Ruth West, an artist and molecular genetics researcher, leads in silico v1.0.

Ecce Homology is the result of a creative collaboration among artists and scientists Ruth West, Jeff Burke, Cheryl Kerfeld, Eitan Mendelowitz, Tom Holton, JP Lewis, Ethan Drucker and Weihong Yan.

The project is being supported by several academic and commercial organizations, including UCLA’s Technology Sandbox, Academic Technology Services, Intel Corporation, NEC Corporation, the UC San Diego Center for Research in Computing and the Arts, and the Computer Graphics and Immersive Technology Laboratory, USC Integrated Media Systems Group.

For more information, visit:

http://www.insilicov1.org

www.fowler.ucla.edu

www.ats.ucla.edu.


Disabilities and Computing Program Open House


Patrick Burke and John Pedersen show off the latest adaptive computing technologies in the Disabilities and Computing lab on Wednesday, Oct. 29. The open house featured demonstrations of state-of-the-art screen-reading, magnification, voice recognition, scanning and reading, and study software.

For more information about the Disabilities and Computing program, see www.dcp.ucla.edu.


The Universe

Studying the birth of the universe has traditionally been a matter of theory, prediction, speculation, and, more recently, computer simulation. But today, astronomers such as UCLA’s Matt Malkan are able to look deep into the sky and observe the real thing as it appeared billions of years ago. Highly advanced telescopes, such as the Hubble Space Telescope and the Keck in Hawaii, have dramatically changed the field of astronomy. Thanks to these telescopes, Malkan and others can view the infancy of very distant galaxies, back when their stars had just begun to throw off detectable light.

And, thanks to UCLA’s Visualization Portal, Malkan and other UCLA researchers are able to bring their work to students, other researchers and broader audiences.

“The Portal allows us to look pretty closely at these rather horrendously complex simulations,” Malkan said. “A supercomputer can simulate literally millions of points in a volume of space, more than most humans can comprehend.” The Portal offers a three-dimensional moving display of a computer simulation, making it easier for the human mind to grasp.

“We’re using telescopes as time machines so we can look back in the early days of the universe and see what these young galaxies are doing,” Malkan said. “It takes the light rays that we’re detecting more than 10 billion years to travel from where they started, from when they were produced, and they leave their galaxies carrying a lot of interesting information.”

The hope is that by studying these faraway galaxies in their formative stages, astronomers will be able to update scientific theory and prediction to answer lingering questions about the structure of the universe and the evolution of our own galaxy, the Milky Way.

Malkan’s work focuses on the photons, or light particles, that infant galaxies produce, especially when a star is created. Once the photons are collected by the Keck’s or the Hubble’s giant mirror, Malkan works to determine how long they’ve existed and how far back in the history of the universe he is peering. He’s able to make these measurements by comparing the size of the universe when the light rays first began their journey through space with the current size of our ever-expanding universe. A relatively new calibration of the universe’s expansion has made it possible for astronomers to put a fairly accurate time stamp on each of the galaxies under observation. Malkan is working closely with University of Zurich physics theorist Ben Moore, a proponent of the “dark matter” theory of galaxy formation.
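Comparing the size of the universe then and now is what astronomers express as cosmological redshift. In the standard notation (the symbols here are not from the article), light emitted at time $t_e$ and observed today at $t_0$ has its wavelength stretched by the same factor by which the universe, with scale factor $a(t)$, has expanded in the meantime:

$$
1 + z = \frac{\lambda_{\mathrm{obs}}}{\lambda_{\mathrm{emit}}} = \frac{a(t_0)}{a(t_e)}
$$

For example, light from a galaxy at $z = 3$ left it when the universe was one quarter of its present size. Turning $z$ into an age or a look-back time also requires a model of how fast the universe has expanded, which is why the calibration of the expansion rate mentioned above matters.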

“The Portal is unique in letting us look at these millions of points in space simultaneously,” Malkan said. “We can watch the universe move forward or backward in time and see how it’s changing before our eyes.” He is also able to move around inside the simulated universe and see how it looks from different locations.

As a teaching tool, the Portal is incomparable, Malkan added. “Watch this model in the Portal for five minutes and you will understand better how our universe formed its structure,” he said.


UCLA performers in the foreground and Stanford performers on the Portal screen in the background.

Faculty Advisor David Beaudry (left) and student Alex Hoff.

A distributed, improvisational performance was presented in the Visualization Portal earlier this month as an Advanced Sound Design class project. The UCLA-Stanford Distributed Performance Project, created by Visiting Assistant Professor David Beaudry of the UCLA Department of Theater, Consulting Professor Elizabeth Cohen of Information Studies, and Stanford student Daniel Walling, explored the possibilities and challenges of creating a theatrical performance that occurs simultaneously in two geographical locations. Beaudry and Cohen served as faculty advisors on the project.

The performance featured two groups of theatrical improvisers, one at Stanford’s Wallenberg Hall and the other at UCLA's Visualization Portal. A group of theater sound design students from UCLA created immersive, multichannel environments and sound effects to support the improvisations. The improvisational groups were connected by real-time audio and video streams over the Internet2 backbone.

The project pursued three main goals. The first was to overcome the geographical distance between actors and designers during the performance. Previous attempts to do this fell short because network latency degraded the quality of sound and video. Improving one generally came at the expense of the other, so it was impossible to create a viable forum for exchange. Recently, however, technological advances, particularly in networking, allowed the project participants to create viable virtual performance spaces: two physical locations joined by the Internet to create a single, unique and fluid performance environment.

The second goal was to address theatrical sound design for a performance that was both improvised and networked. In traditional theater, where the audience is in a single location, sound designers build cues and sound effects to support the action on stage. When building those cues, designers usually have a script from which to design the sound component and a predictable (i.e., linear) order of execution during performance. The designers on this project were challenged not only to create interesting and complex sound as a viable part of an improvised performance, but also to make that sound engaging in both geographical locations. This dual challenge required rethinking and restructuring the traditional methods of theatrical sound design.

The third goal was to effectively archive the performance. How does one document and archive a collaborative theatrical performance when the performers are in physically distinct locations and the collaborative environment is virtual? How does one archive the eight channels of audio, two video streams, nine actors, and two audiences involved in this production? Documenting such an event, not just the performance but the process as well, was a formidable challenge.

Project Goals

• To enable spatially distributed improvisational performance.

• To identify the technology that enables spatially distributed performance.

• To understand what contributes to the perception of “ensemble.”

• To create a robust digital archive of the performance.

• To address the problem of theatrical sound design in entirely improvised performances, as well as network-based performances.

The performance project comprised several important components.

Audio and Video Streaming

The audio connection is supported by the StreamBD software, created by the SoundWIRE research group at Stanford’s CCRMA, which streams uncompressed, multichannel, professional-quality audio over Internet2. StreamBD is research-prototype software that provides low-latency uncompressed audio streaming over high-quality networks. It was created to run on "CCRMAlized" computers running Linux/OSX. The delays in streaming are only a few milliseconds above network latency.
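Because the audio is sent uncompressed, its network cost follows directly from the format: bit rate = channels × sample rate × bits per sample. The sketch below applies that arithmetic, plus the time needed to collect one packet's worth of samples, which is one source of added delay. The eight-channel count matches the production described above; the 48 kHz, 24-bit, 64-frame-per-packet settings are assumptions for illustration, not StreamBD's documented configuration.

```python
def pcm_bitrate(channels: int, sample_rate_hz: int, bits_per_sample: int) -> int:
    """Bits per second for uncompressed linear PCM audio (payload only, no packet headers)."""
    return channels * sample_rate_hz * bits_per_sample


def packet_delay_ms(frames_per_packet: int, sample_rate_hz: int) -> float:
    """Milliseconds needed to fill one packet with sample frames before it can be sent."""
    return 1000.0 * frames_per_packet / sample_rate_hz


if __name__ == "__main__":
    # Assumed settings: 8 channels, 48 kHz sampling, 24-bit samples, 64 frames per packet.
    rate = pcm_bitrate(channels=8, sample_rate_hz=48_000, bits_per_sample=24)
    print(f"{rate / 1e6:.3f} Mbit/s before packet overhead")
    print(f"{packet_delay_ms(64, 48_000):.2f} ms to fill one packet")
```

Under these assumptions the stream needs about 9.2 Mbit/s, and each packet adds roughly 1.3 ms of fill time. Small packets keep that delay down at the cost of more per-packet overhead, which is the usual trade-off for low-latency audio streaming.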

The project uses OpenMash for video streaming. OpenMash allows high-quality video streaming at approximately 150 milliseconds above network latency. The project also uses RTPtv, an open-source software package that runs on Linux and Windows, to send and receive high-bitrate "broadcast quality" television over IP using the IETF RTP protocol and M-JPEG (Motion JPEG). An RTPtv stream carries stereo audio plus either 720x480 or 720x576 interlaced video (D1 video) or 352x240 or 352x288 progressive video (CIF).
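The D1 and CIF options differ by roughly a factor of four in raw data rate, which a short calculation makes concrete. This sketch assumes 8-bit 4:2:2 sampling (16 bits per pixel) and the usual frame rates of 29.97 frames/s for the 480- and 240-line formats and 25 frames/s for the 576- and 288-line formats; these are assumptions, not RTPtv settings. M-JPEG compression reduces the rate actually sent by an amount that depends on its quality setting, so the figures are uncompressed upper bounds.

```python
# name: (width, height, frames per second); frame rates are assumed NTSC/PAL values
FORMATS = {
    "D1 720x480": (720, 480, 29.97),
    "D1 720x576": (720, 576, 25.0),
    "CIF 352x240": (352, 240, 29.97),
    "CIF 352x288": (352, 288, 25.0),
}


def raw_video_bitrate(width: int, height: int, fps: float, bits_per_pixel: int = 16) -> float:
    """Uncompressed video bit rate in bits per second (16 bpp corresponds to 8-bit 4:2:2)."""
    return width * height * bits_per_pixel * fps


if __name__ == "__main__":
    for name, (w, h, fps) in FORMATS.items():
        print(f"{name}: {raw_video_bitrate(w, h, fps) / 1e6:.1f} Mbit/s uncompressed")
```

Under these assumptions, D1 video is about 166 Mbit/s before compression and the CIF sizes about 41 Mbit/s, so choosing the smaller format cuts the load on the network by roughly four times before M-JPEG is applied.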

Sound Design

The sound designers built custom software and interfaces for real-time control and processing of both live and prerecorded sound. The software uses IRCAM/Cycling74’s multimedia programming environment, Max/MSP.

Faculty Advisors

David Beaudry, Visiting Assistant Professor, UCLA Department of Theater; Virtual Reality Audio Specialist & Audio Technologist, UCLA Visualization Portal/Academic Technology Services

Elizabeth Cohen, Consulting Professor, Electrical Engineering, Stanford University, and Visiting Professor of Information Studies, UCLA

Chris Chafe, Professor of Music, Stanford University; Director, CCRMA

People Involved

Actors - All of the performers are current members or alumni of the Stanford Improvisers (SImps), coached by Patricia Ryan.

Sound designers - Both sound designers were students in David Beaudry’s Advanced Theater Sound Design class.

Archivists - All archivists were students in Elizabeth Cohen’s class.

Coordinators:

UCLA – David Beaudry, Visiting Asst. Professor in Sound Design, UCLA Dept. of Theater, and Virtual Reality Audio Specialist, UCLA Visualization Portal.

Stanford University – Daniel Walling, Stanford University.


Video clip of the performance: This clip shows a scene about a child and her grandfather on a fishing trip. Actors from UCLA and Stanford act out the same scene simultaneously. The catch is that the actors at each venue take turns making up dialogue and acting out a story while the actors at the other venue imitate them. In the clip, the UCLA performers are in the foreground and the Stanford actors are on the Portal screen in the background.


zero@wavefunction: nano dreams and nightmares, a unique UCLA Media Art/NanoScience collaboration, was recently unveiled to a campus audience at the Visualization Portal.

Created by Victoria Vesna, chair of the Department of Design/Media Arts, and James Gimzewski, a leading expert on nanotechnology and a professor in UCLA’s Department of Chemistry and Biochemistry, zero@wavefunction was conceived to help make nanoscience more accessible and understandable to the broader public.

Buckyballs (shown in the photo) respond via sensors to the movement of a person’s shadow.

See a movie of buckyballs: QuickTime (high bandwidth) / QuickTime (low bandwidth)

For more information, go to http://notime.arts.ucla.edu/zerowave


Medical students viewed a live orthopedic surgery from the Visualization Portal as part of an Internet2 member meeting hosted by USC. While doctors in the UCLA Medical Center explained the surgery and answered questions, students were also linked to an orthopedic surgeon at Stanford’s SUMMIT, who used a 3-D hand model to further explain the surgery, and to orthopedic surgeons at the conference site. The goal of the project was to explore teaching opportunities provided by Internet2.


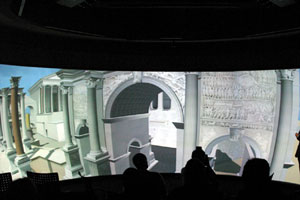

A 3-D immersive computer model of the Roman Forum at the peak of its development, just prior to the fall of the Roman Empire, was unveiled to a prestigious audience in the Visualization Portal in January. The audience included Dr. Paolo Liverani, Curator of Antiquities for the Vatican Museums, and scholars from UCLA and other universities. Dr. Bernard Frischer, director of UCLA’s Cultural Virtual Reality Lab; Dean Abernathy, chief modeler of the visualization; and Dr. Diane Favro, CVR Lab associate director for research and development, talked about the value of the model and the huge effort involved in creating it. Believed to be the most complex digital model ever created of an archaeological site, the model shows 22 buildings and monuments based on the latest research on the Forum. Only two of the structures, both badly damaged, survive in Rome today.


Professor John Dagenais, Department of Spanish and Portuguese, uses the Visualization Portal to show a model of the Cathedral of Santiago de Compostela, the Romanesque pilgrimage cathedral of the medieval period.

The digital model recreates the medieval cathedral and allows students and researchers to experience the space of the cathedral as it would have been seen by medieval pilgrims to Santiago de Compostela.

The simulation serves as the background for Professor Dagenais' introductory course in Medieval Spanish literature and as an ongoing research project for students in a summer-session class studying and traveling the pilgrimage route. The model is also beginning to be used by architectural historians and archeologists to pose questions about the development of the building over time and as a way of testing various scenarios for archeological reconstructions. This restoration project shows the building as it appeared when dedicated by Bishop Pedro Munoz on April 3, 1211 A.D.

Dean Abernathy, an architect, a principal member of the Cultural VR Lab and a Ph.D. candidate at UCLA, was chief modeler on the project.

Doing additional research work on the Cathedral of Santiago de Compostela project in the ATS Visualization Lab: Dean Abernathy, UCLA Cultural VR Lab (left); Jose Suarez Otero, Archeologist and Conservator, Cathedral of Santiago de Compostela (center); John Williams, Visiting Mellon Professor of the History of Art and Architecture, University of Pittsburgh (right); John Dagenais, Professor of Spanish and Portuguese (top).

Jose Suarez Otero, Archeologist and Conservator, Cathedral of Santiago de Compostela (left); Dean Abernathy, UCLA Cultural VR Lab (center); John Williams, Visiting Mellon Professor of the History of Art and Architecture, University of Pittsburgh (right).


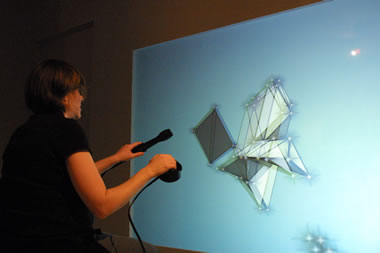

Musical Arts doctoral candidate David Beaudry used the unique capabilities of the Visualization Portal in his research on new forms of musical expression for acoustic instruments through interactive digital technology. His goal is to “return interactivity to musical performance and generate new performance media,” which involves designing pathways for communication between acoustic instruments and computers. Learn more about David’s work by viewing his video or reading his proposal.


Multimedia Links:

David Beaudry Sound Engine - Low Bandwidth

Beaudry Sound Engine - High Bandwidth


David Beaudry Sound Engine - Surestream


Read David Beaudry's Proposal



Academic Technology Services (ATS), Seeds University Elementary School (UES), and the UCLA Hammer Museum have completed a successful pilot of a Visual Thinking Strategies (VTS) program designed to introduce elementary teachers to an innovative teaching strategy for young students. The pilot project also explored a variety of educational and training possibilities offered by UCLA’s Visualization Portal and Internet2. ATS is currently working with Linda Duke, director of education at the UCLA Hammer Museum, on a videotape of the project that will be shown at the Internet2 fall conference, to be held at USC.

The Visual Thinking Strategies method, developed by psychologist Abigail Housen and art educator Philip Yenawine, focuses on helping young students learn to appreciate the arts and apply the critical thinking skills learned in art appreciation to other fields.

The project had three primary objectives: to develop a model for VTS training that uses Internet2 and can be scaled up for use in public schools; to expose pre-service teachers, through videoconferenced participation, to a model of rich, probing discussion among colleagues about their teaching; and to experiment with using VTS to prepare students to engage actively with on-screen images of the kind that might be used in later distance-learning initiatives.

“Organizers of this pilot believe there is strong evidence to indicate that the skills and behaviors fostered in students by the VTS are exactly those needed for a satisfying educational experience with other computer-based instructional programs,” said Ms. Duke.

The VTS uses facilitated peer discussion of art images to help students develop critical and creative thinking, evidence-based reasoning, advanced looking, and communication skills.

“This is such a rich curriculum,” said Sharon Sutton, coordinator of Technology and Outreach at Seeds UES. “The benefits for the students and the teachers are just tremendous.” Ms. Sutton worked with Ms. Duke to create the program at UES.

Nine UES teachers completed the training, along with several UCLA graduate students and Los Angeles Unified School District staff members. ATS videoconferenced the teacher training and debriefing sessions from UES to the Visualization Portal and to the Massachusetts Institute of Technology over Internet2.


UCLA’s new Statistical Computing Web Portal (http://statcomp.ats.ucla.edu) offers visitors an easy way to learn about commonly used statistics packages while giving people interested in statistical computing an opportunity to interact with one another. One of UCLA’s Centers for Scholarly Interaction, the UCLA Stat Computing Portal is a virtual meeting place for the UCLA research and teaching community and for collaboration among statistical consulting centers around the world. See:

http://statcomp.ats.ucla.edu/propcollaboration.htm

The Stat Computing Portal, which provides links to web sites for commonly used statistical packages such as SAS, Stata, and SPSS, can also search across those sites, saving users the time and effort of searching each site individually.

The Stat Computing Portal supplements the ATS Statistical Computing pages (/stat/) and other Statistical Consulting services (/stat/Qtr_Schedule.htm).

For more information about Centers for Scholarly Interaction, go to www.itpb.ucla.edu and click on Strategic Plan Areas of Emphasis.
